Ways Rutgers Is Shaping the AI Revolution

Rutgers researchers were working in the field of artificial intelligence decades before AI became a common part of our lives through facial recognition software, social media algorithms, chatbots, digital assistants such as Alexa and Siri, and many other technologies.

The term artificial intelligence describes computers and other machines that have been programmed to make predictions, recommendations, or decisions (often using massive data sets and large-scale optimization) in a way that can be understood as using knowledge to achieve goals, which can appear to emulate aspects of human work and cognition.

It’s the technology that lets Amazon make recommendations based on purchases and that powers Google searches and automated financial trading, says Matthew Stone, a professor of computer science in the School of Arts and Sciences at Rutgers University-New Brunswick, who teaches courses that prepare students to become the next generation of leaders in AI.

In 1968, Saul Amarel, founder of Rutgers’ computer science department, wrote a paper on artificial intelligence that put him at the forefront of the AI movement. Today, Rutgers is still breaking ground in the field. Rutgers researchers are exploring ways to use AI to fight crime, improve cybersecurity, and expand job opportunities for people with disabilities. They are also tackling the legal guidelines and philosophical questions surrounding the technology’s future.

Here are just some of the ways that Rutgers researchers are using AI to address critical needs in the world.

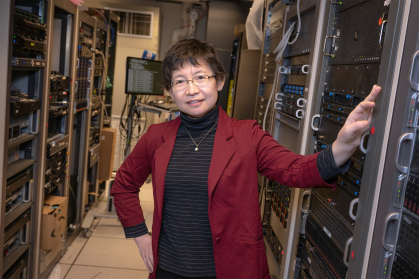

We Are Using AI to Thwart Cyber Attacks

Rutgers computer scientist Jie Gao is working with some of the top leaders in her field to develop cybersecurity methods that use artificial intelligence to safeguard against threats.

Gao is part of the AI Institute for Agent-based Cyber Threat Intelligence and Operation, one of seven new national AI research institutes, which brings together researchers from 11 schools.

“We want to develop next generation security,” Gao says. “The idea is to develop an automated AI system that will recognize potential threats, communicate with other agents, and develop a response mechanism.”

Rutgers Law Professor Takes the Lead on Federal Regulation of AI

What are the ethical, legal, personal privacy, and economic ramifications of the AI evolution?

Ellen P. Goodman, a Rutgers law professor, is leading a federal initiative to ensure that artificial intelligence used in a growing number of sectors, including education, employment, finance, and health care, is trustworthy and safe. The Artificial Intelligence Accountability Policy Report she authored was released on March 27.

“AI is being incorporated into all kinds of and processes like employment, health care, criminal justice, information and legal services, and transportation and climate response,” Goodman said. “These tools are here and they are spreading, so the question is, how can they be managed in a way to maximize the benefits and mitigate the risks?”

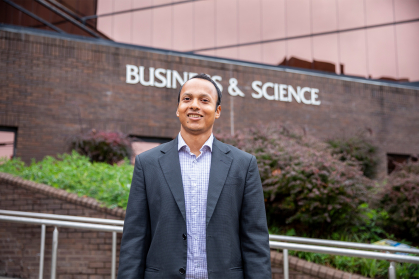

Rutgers Researcher Studies Using AI to Prevent Serious Community Threats

Computer scientist Sheikh Rabiul Islam is examining how AI could be used to address serious community threats: potential school shootings and alcohol-related road fatalities.

Islam and his team sought to use AI to develop preventive measures against school shootings. These incidents are shocking when they happen, but offenders often exhibit warning signs on social media that go undetected or unreported. The researchers used AI to evaluate online posts for such signs.

Islam’s research has also focused on addressing bias in policing and using AI to track and reduce DUI-related deaths.

"Many people are concerned about the potential impact of AI, but I think it has the potential to foster peace and justice around the globe," Islam said.

Researchers Are Investigating Wearable Robots to Expand Job Opportunities

Two teams of researchers are working to help people overcome the physical challenges of work.

In one project, Rutgers faculty are collaborating with engineers at North Carolina State University and New York University to develop a soft wearable robot called “PECASO” that will integrate AI for more advanced control. The technology, supported by a $1.9 million National Science Foundation (NSF) grant, will assist workers who have restricted upper-limb mobility, including people with multiple sclerosis, cerebral palsy, carpal tunnel syndrome, and other illnesses and injuries.

It’s expected to enhance employment opportunities for people with disabilities in the retail, warehouse, and manufacturing sectors.

In another project, Rutgers researchers are using a $1.1 million NSF grant to develop a soft, safety-sensing robot exoskeleton for construction workers.

Powered by machine learning and mixed reality technology, the exoskeleton helps workers with strength, balance, and injury prevention when they are squatting, kneeling, or bending to perform tasks.

Our Research Can Help Combat the Dark Side of AI

Deepfakes, which are highly realistic but completely fake images, video, or audio created by artificial intelligence, can be used for exploitation, propaganda, and other nefarious purposes.

Rutgers alumnus Eric Wengrowski and School of Engineering professor Kristin Dana developed an artificial intelligence technology that makes deepfakes easier to detect, helping businesses and organizations protect their media assets and intellectual property.

Rutgers Faculty and Alumnus Work to Ensure AI Is Accessible Worldwide

Rutgers alum Vukosi Marivate, chair of data science at the University of Pretoria in South Africa, is teaming up with Critical AI @ Rutgers, an interdisciplinary initiative that deepens understanding of the emerging technology, to make sure no culture is left out of the AI evolution.

Marivate has been working to improve AI’s ability to recognize different languages since completing his doctorate in computer science at Rutgers. After returning to South Africa, he and others in his field realized that AI was moving fast globally and that they had to get on board before the continent was left behind. They also realized they couldn’t wait for others to help them.

“We didn't see the gap was going to be filled by any other constituents, whether it's government, the private sector, or academia,” Marivate said. “What you need is to have more people doing AI.”

We Are Using AI to Improve Bridge Safety

Nearly half of all bridges across the United States are over 50 years old, with many considered structurally deficient and facing additional stressors like increased traffic loads and climate change.

Led by civil and environmental engineer Nenad Gucunski, researchers at the Rutgers Center for Advanced Infrastructure and Transportation are using artificial intelligence to rapidly scan and evaluate enormous amounts of bridge data and to help answer queries.

The Advanced Bridge Technology Clearinghouse, a platform that incorporates AI technology, gathers the latest innovations in bridge safety. It was created with a $5 million U.S. Department of Transportation grant.

“As these bridges age and face additional stresses from climate change and increased traffic loads, there is an urgent need for innovative solutions that can ensure the long-term safety and sustainability of these structures,” Gucunski said.

Our Researchers Are Using AI to Predict Disease

Rutgers scientists have created IntelliGenes, first-of-its-kind AI-powered software that can produce personalized disease predictions.

The software combines artificial intelligence and machine-learning approaches to predict disease in individuals.

“IntelliGenes can support personalized early detection of common and rare diseases in individuals, as well as open avenues for broader research ultimately leading to new interventions and treatments,” said Zeeshan Ahmed, a faculty member at the Rutgers Institute for Health, Health Care Policy and Aging Research.

Rutgers Philosopher Examines How AI Could Pose Major Risks to Society

Along with its benefits, AI can also bring risks. Rutgers philosopher Cameron Kirk-Giannini critically examines two major philosophical arguments that claim to show how AI could pose large-scale risks to society.

“My view is that we should approach technologies that have the potential to cause serious harm with a safety mindset – we should operate under the assumption that they will cause serious harm if we don't take precautions,” Kirk-Giannini said. “My hope is that drawing attention to arguments for AI risk will help to move us in the right direction.”